Section: New Results

Pedestrian detection: Training set optimization

Participants : Remi Trichet, Javier Ortiz.

keywords: computer vision, pedestrian detection, classifier training, data selection, data generation, data weighting

The emphasis of our work is on data selection. Training for pedestrian detection is, indeed, a peculiar task: it aims to distinguish a few positive samples with relatively low intra-class variation from a swarm of negative samples depicting everything else present in the dataset. Consequently, the training set lacks discrimination and is highly imbalanced. Because oversampling may create noisy data and undersampling is likely to lose information, balancing positive and negative instances is rarely addressed in the literature.
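The trade-off between the two naive balancing strategies can be illustrated with a minimal sketch, assuming samples are stored as rows of a numpy feature matrix. Random undersampling throws away most negatives, while random oversampling duplicates positives, which can amplify any noise they contain. The helper names and the synthetic Gaussian features below are hypothetical and only stand in for real HoG descriptors.

```python
import numpy as np

def undersample(neg, n_target, rng):
    """Randomly keep n_target negatives; the discarded ones may carry useful information."""
    idx = rng.choice(len(neg), size=n_target, replace=False)
    return neg[idx]

def oversample(pos, n_target, rng):
    """Randomly duplicate positives up to n_target; duplicates also replicate noisy samples."""
    extra = rng.choice(len(pos), size=n_target - len(pos), replace=True)
    return np.concatenate([pos, pos[extra]], axis=0)

# Hypothetical, synthetic 8-dimensional features: few positives, many negatives.
rng = np.random.default_rng(0)
pos = rng.normal(1.0, 0.5, size=(100, 8))
neg = rng.normal(0.0, 1.0, size=(5000, 8))

neg_u = undersample(neg, len(pos), rng)   # balanced by shrinking the negatives
pos_o = oversample(pos, len(neg), rng)    # balanced by growing the positives
print(neg_u.shape, pos_o.shape)
```

Neither variant adds information: undersampling keeps 100 of 5000 negatives, and oversampling only repeats the 100 original positives.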

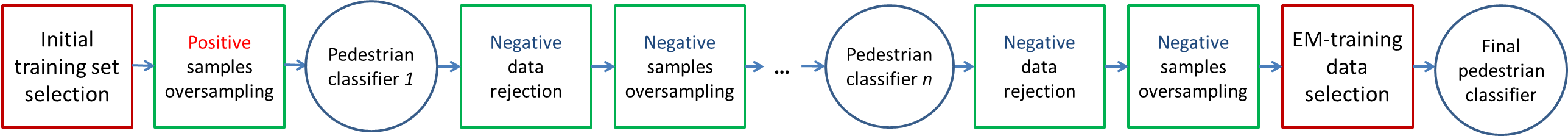

Bearing these data selection principles in mind, we introduce a new training methodology built on two contributions. First, it uses an expectation-maximization scheme to weight training data according to their importance for classification. Second, it improves cascade-of-rejectors [105][54] classification by enforcing balanced training and validation sets at every stage and by optimizing separately for recall and precision. A new data generation technique was developed for this purpose.
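The report does not specify the expectation-maximization scheme, so the following is only a hypothetical illustration of how EM can turn scores into sample weights. It assumes each training sample carries a scalar score (for instance the signed margin of a preliminary classifier, with 0 on the decision boundary) and that these scores follow a two-component Gaussian mixture: an "easy" component far from the boundary and an "informative" one near it. EM fits the mixture, and the posterior probability of the near-boundary component serves as the weight. The mixture model, the component choice and the function names are assumptions, not the authors' method.

```python
import numpy as np

def em_gmm_1d(x, n_iter=200, tol=1e-8):
    """Fit a two-component 1-D Gaussian mixture to the scores x with EM."""
    mu = np.array([x.min(), x.max()], dtype=float)  # spread-out initial means
    var = np.full(2, x.var() + 1e-6)
    pi = np.array([0.5, 0.5])
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: responsibilities resp[i, k] = p(component k | x_i).
        log_p = (np.log(pi) - 0.5 * np.log(2 * np.pi * var)
                 - 0.5 * (x[:, None] - mu) ** 2 / var)
        log_norm = np.logaddexp(log_p[:, 0], log_p[:, 1])
        resp = np.exp(log_p - log_norm[:, None])
        # M-step: re-estimate mixing weights, means and variances.
        nk = resp.sum(axis=0)
        pi = nk / len(x)
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6
        # Log-likelihood of the parameters used in this E-step; stop when it plateaus.
        ll = log_norm.sum()
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return pi, mu, var, resp

def importance_weights(scores):
    """Hypothetical weighting: posterior of the component whose mean is closest to 0."""
    _, mu, _, resp = em_gmm_1d(scores)
    k = int(np.argmin(np.abs(mu)))
    return resp[:, k]

# Synthetic scores: 200 samples near the boundary, 800 confidently classified ones.
rng = np.random.default_rng(0)
scores = np.concatenate([rng.normal(0.0, 0.3, 200), rng.normal(3.0, 0.5, 800)])
w = importance_weights(scores)
print(w[:200].mean(), w[200:].mean())
```

Samples near the boundary receive weights close to 1 and easy samples weights close to 0; such weights could then be passed to any classifier that accepts per-sample weights.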

The training procedure unfolds as follows. After the initial data selection, we balance the numbers of negative and positive samples. Then, a cascade of n negative-data rejectors is trained, and the negative samples identified by each rejector are discarded. After each training step, the negative samples of the validation set are oversampled to keep it balanced. The final classifier is learned on the data remaining after this selection. Figure 9 illustrates the process.
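The procedure above can be sketched as a loop over rejectors, under several loudly stated assumptions. A regularized Fisher linear discriminant stands in for the HoG-based classifiers. Each rejector's threshold is set on validation positives to reach a target recall (the recall-oriented stage). Validation negatives are rebalanced by plain random duplication, because the report's own data generation technique is not described here. All names (`fit_lda`, `balance`, `train_cascade`, `passes_stages`) and parameter values are hypothetical.

```python
import numpy as np

def fit_lda(pos, neg, reg=1e-3):
    """Fisher discriminant; the bias places the boundary midway between the class means."""
    mu_p, mu_n = pos.mean(axis=0), neg.mean(axis=0)
    sw = np.cov(pos, rowvar=False) + np.cov(neg, rowvar=False)
    w = np.linalg.solve(sw + reg * np.eye(len(mu_p)), mu_p - mu_n)
    return w, -0.5 * (mu_p + mu_n) @ w

def score(model, x):
    w, b = model
    return x @ w + b

def balance(pos, neg, rng):
    """Resample negatives to len(pos): undersample if plentiful, oversample if scarce."""
    idx = rng.choice(len(neg), size=len(pos), replace=len(neg) < len(pos))
    return neg[idx]

def train_cascade(pos_tr, neg_tr, pos_va, neg_va, n_stages=3, recall=0.99, seed=0):
    rng = np.random.default_rng(seed)
    neg_va = balance(pos_va, neg_va, rng)  # initial balancing of the validation set
    stages = []
    for _ in range(n_stages):
        if len(neg_tr) == 0:
            break  # every training negative has already been rejected
        model = fit_lda(pos_tr, balance(pos_tr, neg_tr, rng))  # balanced training set
        # Recall-oriented threshold: keep `recall` of the validation positives.
        thr = np.quantile(score(model, pos_va), 1.0 - recall)
        # Fraction of the (balanced) validation negatives this stage rejects.
        rej = float(np.mean(score(model, neg_va) < thr)) if len(neg_va) else 1.0
        stages.append((model, thr, rej))
        # Discard the negatives identified by this rejector.
        neg_tr = neg_tr[score(model, neg_tr) >= thr]
        neg_va = neg_va[score(model, neg_va) >= thr]
        if len(neg_va) > 0:
            neg_va = balance(pos_va, neg_va, rng)  # oversample survivors back to balance
    final = fit_lda(pos_tr, balance(pos_tr, neg_tr, rng)) if len(neg_tr) > 0 else None
    return stages, final, neg_tr

def passes_stages(stages, x):
    """Boolean mask of the samples that survive every rejector."""
    keep = np.ones(len(x), dtype=bool)
    for model, thr, _ in stages:
        keep &= score(model, x) >= thr
    return keep

# Synthetic 5-D data: positives shifted away from a large negative population.
rng = np.random.default_rng(1)
pos_tr, pos_va = rng.normal(1.5, 1.0, (200, 5)), rng.normal(1.5, 1.0, (200, 5))
neg_tr, neg_va = rng.normal(0.0, 1.0, (5000, 5)), rng.normal(0.0, 1.0, (2000, 5))
stages, final, neg_left = train_cascade(pos_tr, neg_tr, pos_va, neg_va)
print(len(stages), len(neg_left), passes_stages(stages, pos_va).mean())
```

Each stage keeps roughly 99% of the validation positives while pruning negatives, so the final classifier is trained on a much smaller and harder negative set; in a real detector, the final stage would instead be tuned for precision.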

Experiments carried out on the Inria [61] and PETS2009 [69] datasets demonstrate the effectiveness of the approach: it enables a simple HoG-based detector to outperform most of its near real-time competitors.

| Method | Inria | Speed |
|---|---|---|
| HoG [61] | 46% | 21 fps |
| DPM-v1 [68] | 44% | 1 fps |
| HoG-LBP [98] | 39% | Not provided |
| MultiFeatures [100] | 36% | 1 fps |
| FeatSynth [51] | 31% | 1 fps |
| MultiFeatures+CSS [97] | 25% | No |
| FairTrain - HoG + Luv | 25% | 11 fps |
| FairTrain - HoG | 25% | 16 fps |
| Channel Features [65] | 21% | 0.5 fps |
| FPDW [64] | 21% | 2-5 fps |
| DPM-v2 [67] | 20% | 1 fps |
| VeryFast [53] | 18% | 8 fps (CPU) |
| VeryFast [53] | 18% | 135 fps (GPU) |
| WordChannels [60] | 17% | 8 fps (GPU) |

| Method | PETS2009 | Speed |
|---|---|---|
| Arsic [48] | 44% | n.a. |
| Alahi [46] | 73% | n.a. |
| Conte [59] | 85% | n.a. |
| FairTrain - HoG | 85.38% | 29 fps |
| FairTrain - HoG + Luv | 85.49% | 18 fps |
| Breitenstein [55] | 89% | n.a. |
| Yang [103] | 96% | n.a. |